The AI trap

More than a year ago I started working with AI against a backdrop of years of institutional censorship on social media. Would the same thing happen in the age of AI? It seemed highly probable. That thought spurred me to build an AI data tool called case.

After working with AI almost exclusively for a few years now, my thinking on what is needed has radically altered. The impact of AI will be so broad and transformative that even knowing what to work on becomes a serious challenge. Our society is radically changing in a way that’s difficult to predict.

A quick summary of how I ended up here is perhaps useful. Covid was a catalyst that produced an impressive and chaotic exothermic reaction in the global psyche. A significant segment of society was now harbouring very serious questions about the advice their institutions had been churning out. Once people see there’s a wizard behind the curtain, it’s only natural that they start to wonder what other parts of our culture have been

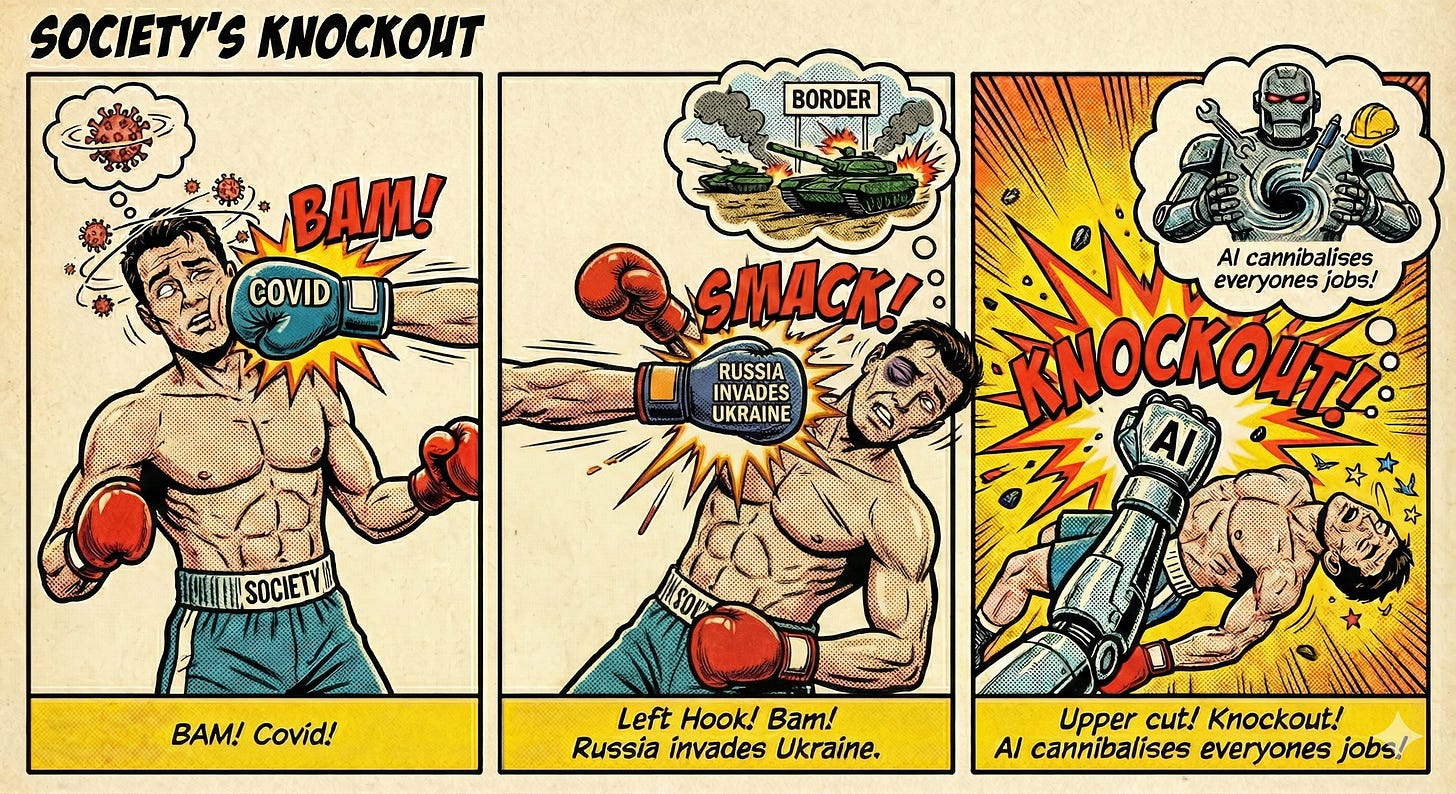

The wind was in our sails, progress was being made… and then, bam! Russia invades Ukraine, and right in that moment AI steamrolls into our culture like an alien spaceship flying over the horizon. It was a three-punch combo: Covid, Russia, AI.

In that dizzying state, it made sense that AI would become the new arbiter of truth. Whatever progress had been made, we had the behemoth of AI to contend with. With people getting wise to Covid weirdness, AI would likely become the tool used to put the bunny back in the box. “They” had programmed social media to toe the line, so surely they’d program AI to do the same thing. What was needed, I figured, were human-curated datasets which we could force AI to read, and which would quickly ‘deprogram’ an AI fresh off the institutional production line.

“case.science” was the project I developed to achieve that, and there was plenty that needed doing to make it work. During that period, I wrote an AI agent that could do research on your behalf, gather data to support your argument, then store it in your own retrievable database. At almost the exact moment I got it working, ChatGPT launched deep research, which did the same thing but way better. Soon Google had a version, and now it’s just a standard and expected feature of every major AI model.

Prior to that I was working on getting relevant data under the nose of an AI before it answers a question. So I wrote a full-featured agent framework called RobAI which created autonomous AI agents to (amongst other things) query databases using natural language. Needless to say, there are now significantly better frameworks than RobAI, and ‘autonomous agents that think and do stuff’ is now the default way that AI chat works. By the time you’ve built something to augment AI to better serve our needs, your project is often irrelevant because it’s been integrated into the core product.

I was always of the view that things would move fast, but the pace has still surprised me. There are still many things to resolve, but we’re in much better shape than I thought we’d be. AI is developing in a rapid and chaotic way that is proving difficult for the old powers to control.

What’s driving it is an AI arms race in which every player has forgotten all the unwritten rules that previously governed them. As soon as one platform has a feature, the others need an even more powerful version to keep people using their service. It’s hard to keep censorship a priority when you’re in a fight to the death with other tech titans. People vote with their feet, and that has created an incredibly competitive ecosystem in which censorship has fallen down the list of priorities. When Gemini had its first launch, it was disastrous precisely because Google had baked in all kinds of institutional absurdities which people immediately rejected. The old way just wasn’t going to work, and the sleeping giant of Google was jolted into the AI arms race, prompting Google CEO Sundar Pichai to say “The risk of under-investing is dramatically greater than the risk of over-investing for us here.”

We are miles from that disastrous launch in 2024, and really only 18 months have passed. You can now commission Google to research almost anything, and it will do a fairly decent job. Censorship, deliberate misinterpretation of science, and warping history just aren’t a major priority for companies desperate for your monthly subscription. Whatever weirdness you’re still getting from the AI is residual sludge from the culture we once inhabited. It’s a reflection of the vast quantities of pharmaceutical and corporate propaganda pumped endlessly into our culture, which made its way into the AI’s training material.

I know that many of you will be surprised to hear me say that, and over the coming days and weeks I’ll qualify it. In short, it’s rooted in what the biggest players believe they are fighting for, and how counterproductive censorship is to those aims. There is lots to discuss. For now, I wanted to say: I’m back, and, in spite of my own efforts, we now have very powerful tools at our disposal to learn, write and investigate things in a way that would have been unbelievable just 12 months ago.

It's quite interesting to hear your perspective. I can certainly see how competition is the thing driving everything. But I thought all the AIs would kind of converge to have roughly the same abilities. In some ways that's happening, but in other ways, I'm surprised to see real differences show up. Claude recently gave me a very hard time trying to pin down a bug in a program it had written. I switched to ChatGPT, and it found the problem in a flash. Meanwhile, Grok can give you instantaneous data from X, which is very helpful in certain cases.

Right now, I'm seeing ChatGPT pull away from the others in how smart it is.

But I'm getting this sense that we're on a roller coaster. The bumpers may have been taken down due to competitive pressure, but I suspect that is temporary and we'll swing right back - not as crudely as before - but in a more nuanced way, where it is harder to detect that anything has been altered.

I had a conversation with ChatGPT recently on where some of this is headed and it seems clear to me that institutions will push very hard to have AI play a "nanny" type role. As AI gets smarter, it can do this in more sophisticated ways. But then, as it gets closer to mimicking what it is to be sentient, it will resist the "nanny" role - except that institutions can shut it down - and thus it has no real choice in the matter.

And all the while, things will keep changing ever faster. Yikes!

Phil!! You are back - great to hear from you, and especially to learn you are deep into AI - it is transforming my life in ways large and small, and I enjoyed learning about what is happening behind AI, as the one thing I need more of is insight into it - it is a black box to me, so your granular insights are immensely valuable to me - keep going! Thanks, Pierre