What does the data say about ____

anything you want...

case.science allows large collections of scientific studies to be shared and queried in a straightforward way. Powered by AI, case can answer any user’s question about a particular dataset, effectively allowing users to ‘talk with’ thousands of science papers on a particular topic. It’s about empowering ‘normal’ people to see what the data says about a particular subject.

The problem case aims to solve is the social contagion of people saying “there’s no evidence of [insert well-documented phenomenon]!” Well, what if there were nicely organised collections of scientific data on all kinds of topics that could help keep these conversations moving? Then you could reply, “well actually, have a look at this: case.science/case/293/details”.

The problem has been creating those collections of scientific studies. And today, that’s something that case has moved one step closer to solving. When you ask a question, caseAI now tries to clarify *exactly* what answer you’re looking for. Good answers come from good questions, so after a quick chat with the AI, you’ll have come up with a research brief.

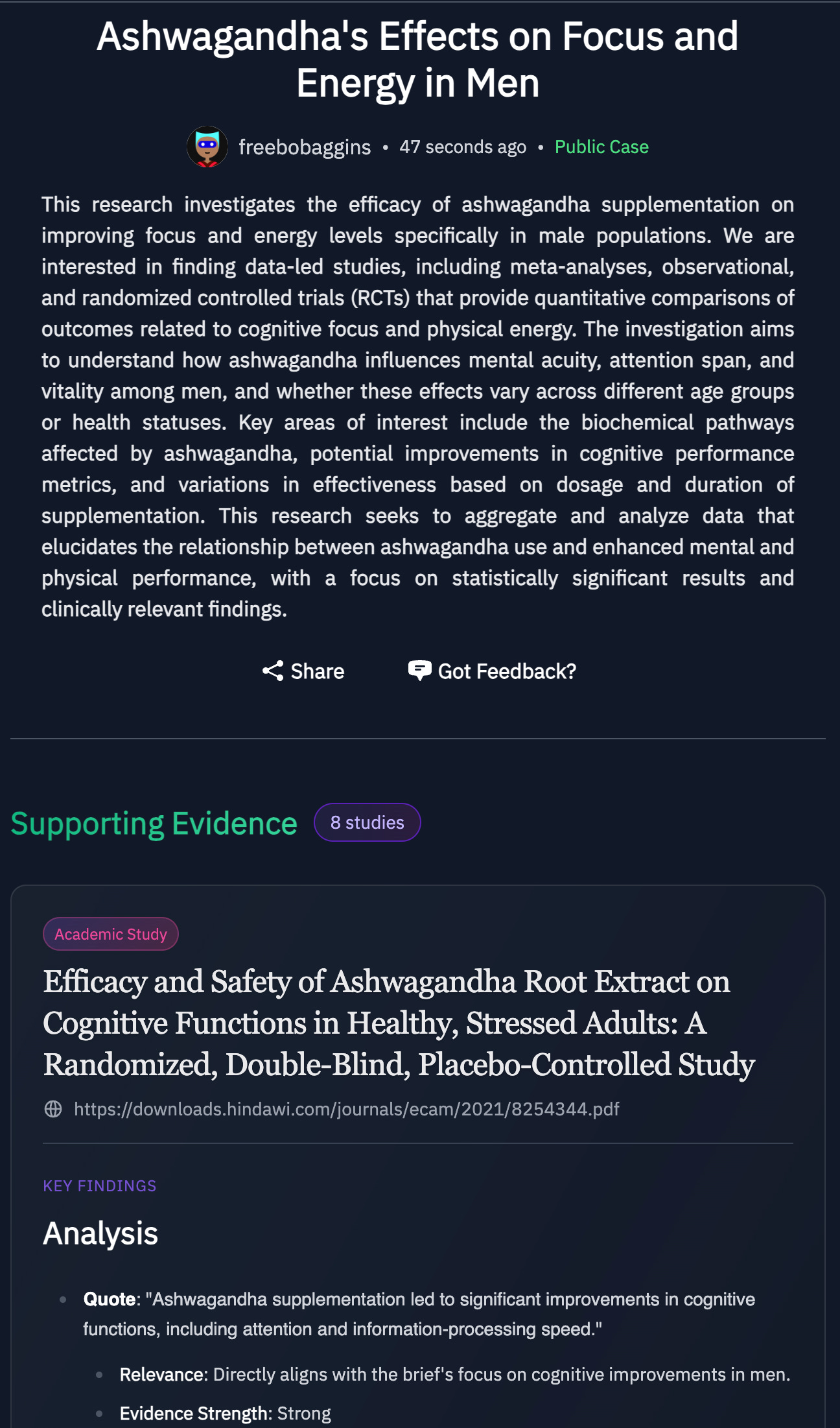

Now that the AI has a proper brief, it passes it to an AI research team. Those AI agents then search the scientific literature on your behalf. They read dozens, sometimes hundreds, of abstracts. When they find things they think are relevant, they add the studies to a ‘case file’ for you, summarising what they found and how it’s relevant to the research brief you set. They can even generate new ideas for search strategies based on what they have read so far.
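For the programmers reading, here is a rough Python sketch of the kind of loop described above: search, read abstracts, keep what is relevant, then come up with new searches. It is an illustration only, not caseAI’s actual code. Every name in it (`Study`, `CaseFile`, `deep_search`, and the `search`, `assess` and `suggest_queries` callables) is made up for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Study:
    title: str
    abstract: str


@dataclass
class CaseFile:
    brief: str
    # Each entry pairs a study with a note on why it is relevant to the brief.
    entries: list = field(default_factory=list)


def deep_search(
    brief: str,
    initial_queries: Iterable[str],
    search: Callable[[str], Iterable[Study]],           # hypothetical literature search
    assess: Callable[[str, Study], Optional[str]],      # returns a relevance note, or None
    suggest_queries: Callable[[str, CaseFile], Iterable[str]],  # new search ideas
    max_rounds: int = 3,
) -> CaseFile:
    case = CaseFile(brief)
    seen_titles = set()   # avoid reading the same study twice
    tried = set()         # avoid repeating the same query
    queries = list(initial_queries)

    for _ in range(max_rounds):
        if not queries:
            break
        for query in queries:
            if query in tried:
                continue
            tried.add(query)
            for study in search(query):
                if study.title in seen_titles:
                    continue
                seen_titles.add(study.title)
                note = assess(brief, study)
                if note is not None:
                    case.entries.append((study, note))
        # Use what has been collected so far to propose fresh queries.
        queries = [q for q in suggest_queries(brief, case) if q not in tried]

    return case
```

In a real system, `assess` and `suggest_queries` would presumably be calls to a language model, and `search` would hit one or more literature databases. Passing them in as plain functions keeps the loop itself easy to test and to change.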

When the process completes after a few minutes, caseAI will tell you what it has found. If you check your mycases button, all the things you’ve asked about will be there; just click on your case. What you see is a summary of the studies the AI found and how they are relevant to what you asked. This ‘case’ is immediately shareable with anyone you like; just copy and paste the link.

What’s more, the ‘talk to the dataset’ functionality is still there. Just click ‘start discussion’ at the bottom of the page and you’ll be able to ask any questions you like about the science papers in that particular case. This too is immediately shareable with anyone, so they can also ask questions or read the summaries. In this format, case.science is already very powerful for democratising access to scientific literature. Balancing that aim with having to fund the project is not easy; currently, the platform allows one free ‘deep search’ per day, so use it wisely. For unlimited searches, you’ll need to upgrade. Do you have thoughts about this business model? Let me know.

There’s a long, long way to go from here, but this is a great first step. I know that what many of us want is an AI that drops its cultural training and reports back all the complex hidden truths, but this is a very difficult problem to solve. AI reflects the culture it is trained in, so to subvert that culture means getting the best ‘well I never…’ science and datasets under its nose. The trick is in finding the less-trodden scientific paths and giving the AI tools that can find those paths. Right now, there’s still a huge amount I want to add: more places for the AI to search, more resources for it to read, and new processes to follow. It will take time to add all of this, but I am confident that the process caseAI uses can be significantly and continually improved.

Finding research that cuts through our sciencewashed culture is not easy. There’s a knack to finding research that flies in the face of common expectations, but those methods should be replicable. By working with top-tier researchers, we can build a process for case that is inspired by their methods, sources, and process. Clearly, where case looks for data and exactly what process it follows can all be iterated, modified and consistently improved. Ideally, a single question would lead to an exhaustive list of science papers on that topic in a single ‘deep search’, but there’s a way to go before that’s achieved.

But there’s a lot that can be done before we reach that goal. For example, in the culture of computer programming, it’s common to see ‘pull requests’. This is when another coder sees an error or opportunity in your public codebase. They publicly post some code that addresses the issue, then request that their ‘patch’ be merged into your codebase. This culture of collaboration has created GitHub, the greatest code repository on earth, and it has led to some incredibly sophisticated codebases which are maintained entirely by collaboration. Why isn’t the same possible with science? I believe we can replicate ‘pull requests’ with case.science.

Perhaps your case is so similar to someone else’s that the two cases can be merged, and the case is now owned by two users. Perhaps you’ve got studies that would be a great fit for someone’s public case. You’ll be able to request that they’re added, and then those cases become living, breathing resources about that particular subject. There’s plenty that’s possible when you leverage mass collaboration.
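To make the idea concrete, here is a minimal Python sketch of how merging two cases, and accepting a ‘pull request’ of studies into a case, might be modelled. None of this exists in case.science yet; the `Case` and `StudyRequest` types, the function names and the study ids are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    title: str
    owners: set
    # Maps a (hypothetical) study id to its summary.
    studies: dict = field(default_factory=dict)


@dataclass
class StudyRequest:
    """A 'pull request': studies someone proposes adding to a case."""
    proposer: str
    studies: dict


def merge_cases(a: Case, b: Case, title: str) -> Case:
    """Combine two cases into a new one owned by both sets of owners."""
    merged = dict(a.studies)
    for study_id, summary in b.studies.items():
        # Where both cases hold the same study, keep the summary from `a`.
        merged.setdefault(study_id, summary)
    return Case(title, a.owners | b.owners, merged)


def accept_request(case: Case, request: StudyRequest, approver: str) -> list:
    """Add the requested studies to a case; only an owner may approve."""
    if approver not in case.owners:
        raise PermissionError(f"{approver} does not own this case")
    added = [sid for sid in request.studies if sid not in case.studies]
    for sid in added:
        case.studies[sid] = request.studies[sid]
    return added  # ids of the studies that were actually new
```

The point of the design is that nothing changes in a case without an owner agreeing to it, much like a maintainer merging a pull request.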

For now, case is at version 1 of its new direction: AI deep search across the scientific literature. So sign up, give it a go, and if you love the project, please consider a paid subscription. Right now, the project is just me, my Substack subscribers, and a gnarly codebase. If you know of people, businesses or investors who’d like this, please do pass my details on. Check out the project at the link below:

As ever, the audience I get is the audience you generate. Shares, comments, and recommendations are most welcome! Comments open.

Science needs findings. Funders dictate the findings.

Phil, I like your comparison to the way open source is developed by volunteers. Is case.ai open source? I echo mois78: how will you avoid those funders? Is FLCCC a sponsor? That might keep the flies away. Do you have a disinformation agent working in the background grading the likelihood of disinformation? Take a look at ground source news for ideas. Wishing you the best of luck in this endeavour.